Deep-Live-Cam: High-quality deepfake avatars, free for anyone

When Deep-Live-Cam first launched in April 2024, it sent immediate shockwaves. Only weeks earlier, Microsoft had teased its VASA-1 research, which creates realistic AI-generated videos from just a single image, with no immediate plans to productize it. Deep-Live-Cam, by contrast, was freely available to anyone.

What is Deep-Live-Cam?

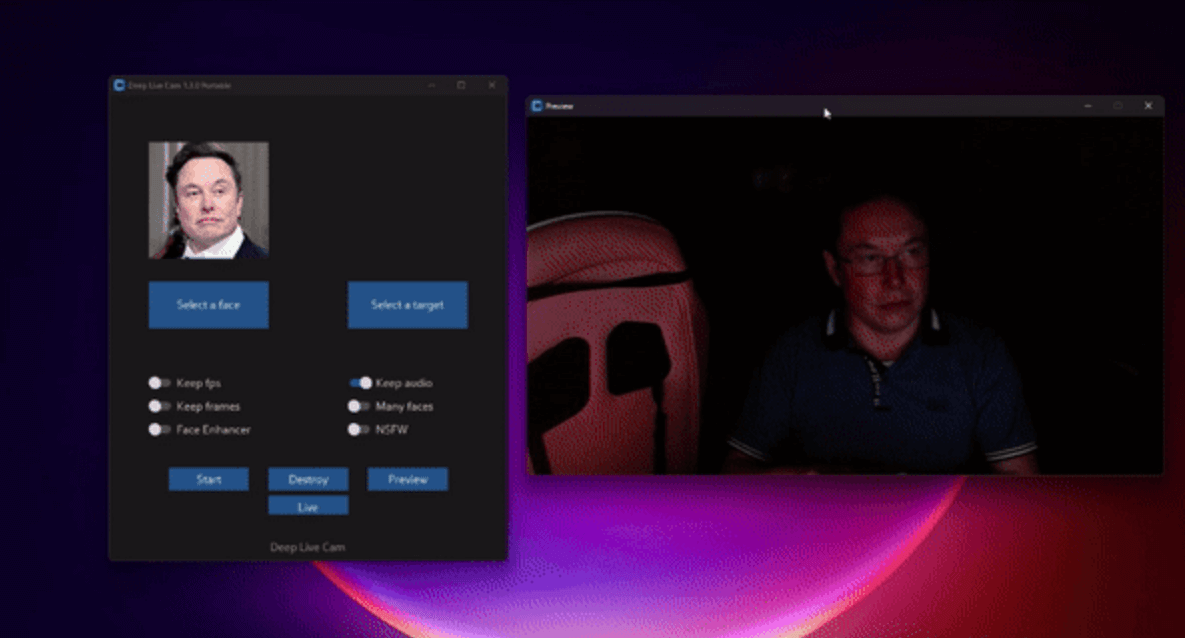

Deep-Live-Cam is an open-source tool, available for free on GitHub, that lets anyone create high-quality, real-time face swaps that look three-dimensional and lifelike, from a single source image. It was designed as a creative tool: it simplifies the animation process for artists, helps them craft unique avatars, and aims to improve productivity for creative professionals.

But if you read a cybersecurity blog like this one, your first thought was probably the same as ours: Deep-Live-Cam ushers in a new era of AI-generated content and, with it, the significant challenge of deepfake detection. OpenAI has even shut down its own generative-AI detection tool because of its disappointing ability to detect its own creations. If even the leaders in generative AI struggle to reliably identify their own outputs, where does that leave companies that offer deepfake detection tools, let alone the enterprise customers who need to detect AI-generated content to protect their own customers from social engineering and identity theft?

For this blog, we created a video using the Deep-Live-Cam library and tested it with the popular “Deepware Deepfake Video Scanner,” which is the top Google result for deepfake scanners or detection tools as of December 2024.

How did detection algorithms perform against Deep-Live-Cam?

First, I uploaded a video generated with Deep-Live-Cam. Deepware Scanner then ran it through four different detection algorithms, each of which outputs a probability score for how likely the video is to contain a deepfake.

Here’s how the detection tools fared:

1. Avatarify Detection

- Result: NO DEEPFAKE DETECTED (1%)

- In production, a score this low would let the deepfake pass as authentic: a false negative.

- The Avatarify algorithm showed a negligible 1% chance that the video was a deepfake, nearly certain it was authentic.

2. Deepware Detection

- Result: NO DEEPFAKE DETECTED (3%)

- In production, a score this low would let the deepfake pass as authentic: a false negative.

- Deepware assigned only a 3% probability of manipulation, indicating high confidence in the video’s authenticity.

3. Seferbekov Detection

- Result: NO DEEPFAKE DETECTED (28%)

- In production, this score would still let the deepfake pass as authentic: a false negative.

- This model performed the best of the four. However, its score still fell well short of flagging the video, a miss that would be unacceptable in fraud detection.

4. Ensemble Detection

- Result: NO DEEPFAKE DETECTED (9%)

- In production, a score this low would let the deepfake pass as authentic: a false negative.

- The Ensemble model, which combines multiple detection approaches, assigned only a 9% likelihood of deepfake content.

These results show that Deep-Live-Cam can create videos convincing enough that existing algorithms fail to classify them correctly, even though those algorithms are specifically designed to identify fake media.
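To make the scoring concrete, here is a minimal Python sketch that turns each reported score into a flag/no-flag decision and labels that decision against the known ground truth (the video is a deepfake). The 0.5 decision threshold is our assumption, not a documented Deepware setting, and the names `DetectorResult`, `is_flagged`, and `outcome` are hypothetical.

```python
from dataclasses import dataclass

# Assumption: a video is flagged when its score reaches 0.5.
# Deepware's actual decision threshold is not stated in this post.
DECISION_THRESHOLD = 0.5


@dataclass(frozen=True)
class DetectorResult:
    name: str
    score: float  # reported probability (0.0 to 1.0) that the video is a deepfake


def is_flagged(score: float, threshold: float = DECISION_THRESHOLD) -> bool:
    """Return True if a detector with this score would flag the video as a deepfake."""
    return score >= threshold


def outcome(flagged: bool, is_deepfake: bool) -> str:
    """Label one decision against ground truth, treating 'deepfake' as the positive class."""
    if is_deepfake:
        return "true positive" if flagged else "false negative"
    return "false positive" if flagged else "true negative"


# Scores reported for our Deep-Live-Cam video in the results above.
RESULTS = [
    DetectorResult("Avatarify", 0.01),
    DetectorResult("Deepware", 0.03),
    DetectorResult("Seferbekov", 0.28),
    DetectorResult("Ensemble", 0.09),
]

if __name__ == "__main__":
    for r in RESULTS:
        flagged = is_flagged(r.score)
        print(f"{r.name}: score={r.score:.2f}, flagged={flagged}, "
              f"{outcome(flagged, is_deepfake=True)}")
```

With the assumed 0.5 threshold (in fact, with any threshold above 0.28), none of the four detectors flags the video. Because the video is a known deepfake and "deepfake" is the positive class, each miss is a false negative. One video yields one outcome per detector, not an error rate; estimating a rate would require scoring many labeled videos.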

Why Is Deep-Live-Cam So Convincing?

1. Real-Time Performance

Deep-Live-Cam generates deepfakes in real time at high frame rates, so they can be shared as easily as a simple Snapchat filter, except that the result is a complex, three-dimensional impression of the impersonated person.
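"Real time" sets a hard budget: every frame must be processed before the next one is due. The minimal Python sketch below expresses that arithmetic. The stage latencies in the usage example are hypothetical numbers, not measurements of Deep-Live-Cam, and the helper names are our own.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to process one frame if output must keep up with `fps`."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def keeps_up(stage_latencies_ms: list[float], fps: float) -> bool:
    """True if running all stages back to back fits inside one frame's budget.

    Simplification: assumes the stages run sequentially on each frame,
    with no pipelining across frames.
    """
    return sum(stage_latencies_ms) <= frame_budget_ms(fps)


if __name__ == "__main__":
    # Hypothetical per-frame costs, e.g. face detection + face swap, in ms.
    stages = [12.0, 15.0]
    print(f"{frame_budget_ms(30):.1f} ms per frame at 30 fps")
    print(keeps_up(stages, fps=30))
    print(keeps_up(stages, fps=60))
```

At 30 fps the budget is about 33.3 ms, so 27 ms of work keeps up; at 60 fps the budget shrinks to about 16.7 ms and the same work does not.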

2. Micro-Expression Precision

The technology captures and reproduces subtle details such as blinking, lip movements, and lighting variations with uncanny accuracy. It uses neural networks that refine artifacts in real time, removing common giveaways such as unnatural movements or lighting mismatches.

3. Stream-Friendly Outputs

Streaming and compression for live settings add noise that can mask the telltale signs of manipulation. Accurate deepfake detection depends heavily on video quality, yet there are many legitimate reasons why a real video stream may be noisy or heavily compressed, which further complicates detection.
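A deliberately simplified Python model shows why compression can hide artifacts. Real codecs such as H.264 quantize transform coefficients rather than raw pixels, so uniform pixel quantization here is only a stand-in for that step; the pixel values are invented for illustration. Any difference smaller than the quantization step can be rounded away.

```python
def quantize(values: list[int], step: int) -> list[int]:
    """Snap each value to the nearest multiple of `step` (uniform quantization)."""
    return [step * round(v / step) for v in values]


# A tiny row of pixel intensities, and the same row with a subtle artifact
# of a few intensity levels, the kind of trace a detector might look for.
clean = [64, 64, 128, 128]
tampered = [67, 62, 131, 126]

if __name__ == "__main__":
    print(tampered != clean)  # True: the artifact is present before compression
    print(quantize(tampered, 16) == quantize(clean, 16))  # True: it is gone afterwards
```

The same rounding applies to genuine streams, which is why a detector cannot simply treat heavy compression as a sign of manipulation.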

The Implications of Undetectable Deepfakes

1. A Looming Trust Crisis

Videos have long been considered credible evidence, but tools like Deep-Live-Cam are rapidly undermining this trust. If crucial evidence in a court case is a recorded video that an intentional impersonator could have manipulated, how do we decide whether to dismiss it when detection tools fail to keep pace?

2. Existing Fraud Risks are Amplified

Undetectable deepfakes already enable, and will increasingly enable, fraud, impersonation, and misinformation campaigns, posing significant risks to cybersecurity and public trust. We will face the same trust challenges as before, amplified by fraudsters’ ability to convincingly impersonate anyone and by the lack of tools that can accurately detect such attacks.

3. Regulation will be Reactive

Existing laws and policies struggle to address the ethical and legal implications of real-time, undetectable deepfakes. One example: biometrics such as your facial likeness have often not been safeguarded the way PII (personally identifiable information) like your social security number is. While that sounds surprising in the context of deepfakes, the reasoning was that someone can claim your name or SSN as their own, but no one could pass off the face of the person next to them as their own. Real-time deepfakes and remote work have drastically changed that reality.

What’s Next?

As detection of deepfakes made with tools like Deep-Live-Cam falls short, companies need a multi-pronged approach to stay secure while regulation and technology catch up:

Protect the Root:

- Safas Secure Camera to prevent content injection: We wouldn’t write a blog if we didn’t have something relevant to contribute. Our Secure Camera certifies the authenticity of footage from the moment it leaves the camera until it reaches the application that displays it, closing the door on inserting deepfakes or virtual cameras into the loop, whether for impersonation or other purposes.
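This post does not describe how the Secure Camera works internally. As a generic illustration of the underlying idea (authenticate frames at the source, verify them at the destination), here is a minimal Python sketch that tags each frame with an HMAC over its bytes and a sequence number. The shared key, `tag_frame`, and `FrameVerifier` are hypothetical; this is not Safas's implementation, and a production design would more likely use asymmetric signatures with the key sealed in camera hardware.

```python
import hashlib
import hmac
import os
import struct


def tag_frame(key: bytes, seq: int, frame: bytes) -> bytes:
    """HMAC-SHA256 over the sequence number and frame bytes.

    Binding the sequence number means a captured frame cannot be
    replayed or reordered without failing verification.
    """
    message = struct.pack(">Q", seq) + frame
    return hmac.new(key, message, hashlib.sha256).digest()


class FrameVerifier:
    """Receiving side: accepts only frames tagged by the key holder, in order."""

    def __init__(self, key: bytes) -> None:
        self._key = key
        self._next_seq = 0

    def accept(self, seq: int, frame: bytes, tag: bytes) -> bool:
        if seq != self._next_seq:
            return False  # replayed, dropped, or reordered frame
        expected = tag_frame(self._key, seq, frame)
        if not hmac.compare_digest(expected, tag):
            return False  # frame was not tagged with the camera's key
        self._next_seq += 1
        return True


if __name__ == "__main__":
    key = os.urandom(32)  # hypothetical: a real device would keep this in secure hardware
    verifier = FrameVerifier(key)

    genuine = b"frame-0 pixels"
    print(verifier.accept(0, genuine, tag_frame(key, 0, genuine)))  # True

    injected = b"deepfake pixels"
    forged_tag = tag_frame(os.urandom(32), 1, injected)  # attacker lacks the key
    print(verifier.accept(1, injected, forged_tag))  # False
```

A virtual camera or injected stream can produce pixels, but without the key it cannot produce valid tags, so the receiving application rejects its frames regardless of how convincing they look.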

Authenticate the material:

- Biometric authentication of meeting participants: Require biometric verification before admitting users, for example, face authentication that participants must pass before joining confidential meetings. Biometric authentication relies on probabilistic matching, so there is a risk of false accepts (deepfakes that pass as the genuine user). While we can expect deepfake detection models to improve, it is a race of detection models against generative models, so we recommend not relying on this layer alone.
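Because biometric matching is probabilistic, every system picks a score threshold that trades false accepts against false rejects. The minimal Python sketch below computes both rates from labeled match scores. The scores in the usage example are hypothetical, with one impostor score standing in for a convincing deepfake, and the function name is our own.

```python
def error_rates(genuine: list[float], impostor: list[float],
                threshold: float) -> tuple[float, float]:
    """Return (false accept rate, false reject rate) at a match-score threshold.

    A probe is accepted when its match score is >= threshold.
    """
    if not genuine or not impostor:
        raise ValueError("need at least one genuine and one impostor score")
    false_accepts = sum(score >= threshold for score in impostor)
    false_rejects = sum(score < threshold for score in genuine)
    return false_accepts / len(impostor), false_rejects / len(genuine)


if __name__ == "__main__":
    genuine = [0.91, 0.85, 0.78, 0.95]   # hypothetical scores for real users
    impostor = [0.20, 0.35, 0.88, 0.10]  # hypothetical; 0.88 plays a strong deepfake
    print(error_rates(genuine, impostor, 0.8))  # (0.25, 0.25)
    print(error_rates(genuine, impostor, 0.9))  # (0.0, 0.5)
```

Raising the threshold stops the strong impostor but rejects twice as many genuine users, which is one reason biometric checks work best as one layer among several.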

Raise awareness internally:

- Any CISO or cybersecurity advocate knows this: most fraud succeeds when the victim does not question an unusual request or is unaware of the red flags that should make them second-guess an action. Education about what is possible is crucial. One approach is to share blogs like this one to show your staff how convincing deepfakes already look and how cheap and easy they are to create. Make sure no staff member believes that deepfakes are exceptionally rare or that they would recognize one right away.

Conclusion

Deep-Live-Cam showcases the immense creative potential of AI and, with it, offers an eerie preview of the tools fraudsters already have for creating highly realistic synthetic media.

This blog showed how the failure of the Avatarify, Deepware, Seferbekov, and Ensemble detection models to flag a Deep-Live-Cam video presents a dangerous challenge for society. As deepfake technology becomes increasingly sophisticated, so must our efforts to develop detection, authentication, and regulatory solutions.

Safas is currently in closed beta. Join our waitlist for a trial.