What Happens When You Can No Longer Trust What You See?

You don’t have to be as old as I feel to remember when we used to say things that implied our eyes were the ultimate source of reality. “I saw it with my own eyes!”, we would say, to suggest that what we saw must have been real. Since then, more and more of our lives have moved from in-person interactions to online ones, and if you work remotely, like I do, your entire work life runs on Zoom meetings. While convenient for work-life balance, trading your physical presence in the office for a digitally transmitted image of yourself also introduces a major vulnerability: in cybersecurity, seeing is no longer believing. In the era of online disinformation and deepfakes, impersonation fraud is more sophisticated than ever. In this blog, we unpack the problem and propose our approach to pulling the rug out from under AI-powered impersonation fraud (teaser: it’s not by using even more AI).

The Crisis of Trust in Digital Communication

As remote work and virtual interactions became the norm, we placed our trust in screens. Seeing our cameras as mere tools, we assumed that a camera shows exactly what it’s supposed to show: the person on the other end of the video call. With deepfakes and live face-swap techniques unlocked by generative AI tools, this is no longer guaranteed. But deepfakes look a little “odd”, so we can usually recognize them, can’t we? As you may guess from the way I phrased that question, it would be naive to assume that humans can reliably outperform a technology evolving at hyperspeed. As of October 2025, generating photorealistic AI video from a single image of a person is as easy as signing up for apps like Grok or Sora 2. The pace of innovation is stunning: realistic video from a single photo was first demonstrated in Microsoft’s VASA-1 research model in April 2024, and only a year later, various commercial models delivered it in even better quality.

Generative AI Has Flipped the Trust Equation

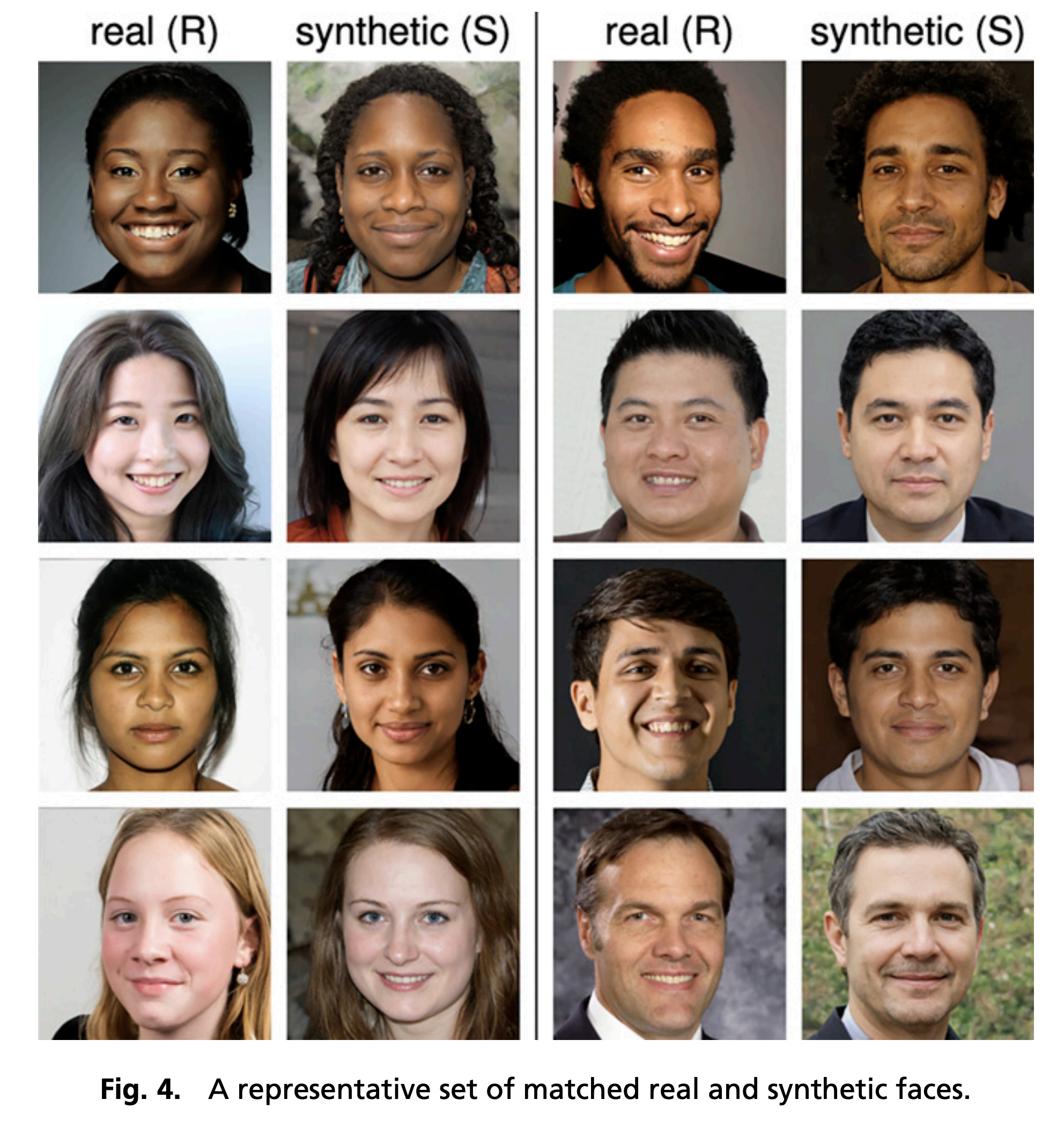

To demonstrate the limits of human intuition, one study asked respondents to rate portraits by how trustworthy they found each face. Without knowing which faces were AI-generated and which weren’t, respondents rated the synthetic faces 7.7% more trustworthy on average (see original paper, page 2). This shows that human judgment is no longer a reliable way to determine whether a video is real and whether we can trust what we see.

These problems are not a thing of the future, so we need solutions now. The brutal reality is that there are no easy solutions, and certainly no perfect ones: this is a rapidly developing situation. So what can we do?

Where Does Verified Trust in Video Start?

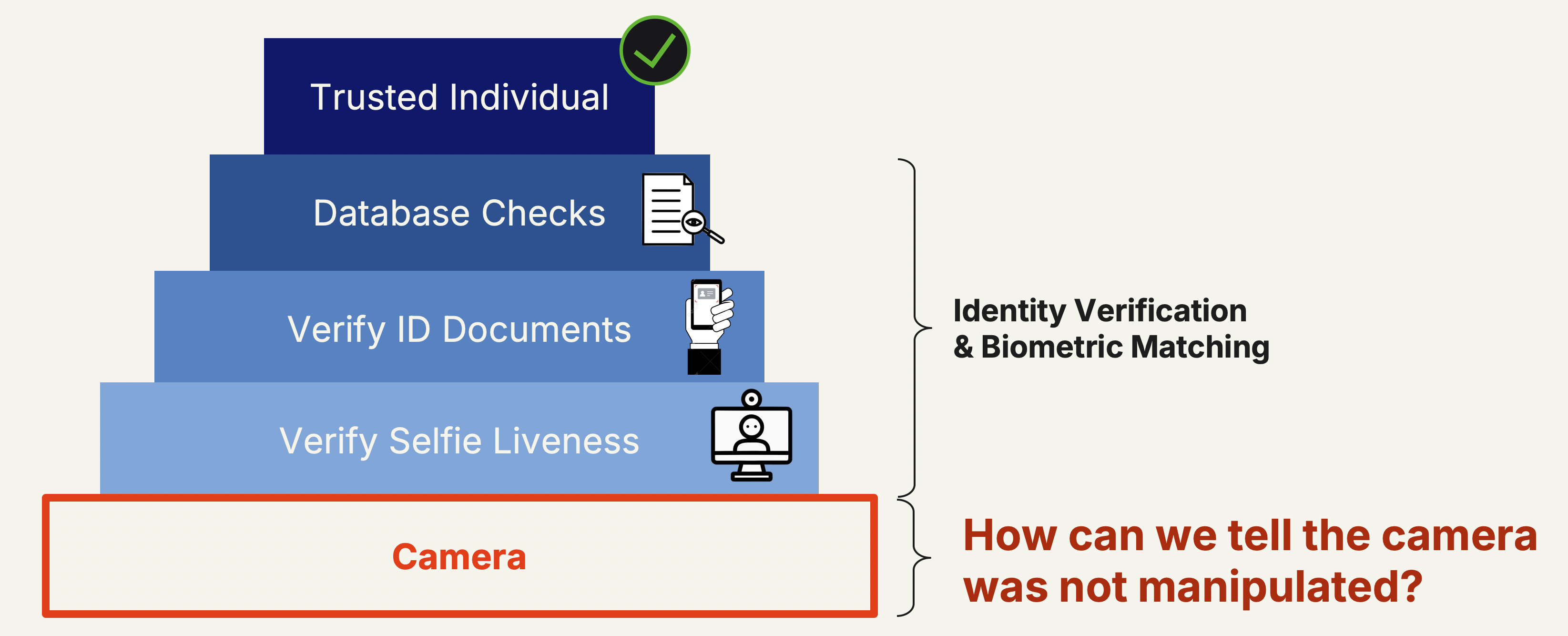

Enterprises like banks, car rental companies, and payment apps nowadays verify people’s biometric identities before allowing them to recover their accounts or apply for new credit cards.

This verification usually involves taking a photo of your government-issued ID and a selfie, with the ID photo potentially being biometrically matched against the selfie. The mechanisms here are comprehensive and typically rely on sophisticated, well-trained machine-learning models that inspect the input for anomalies indicating digital manipulation, deepfakes, physical masks, or printouts held up to the camera. However, these fraud-detection algorithms assume they are receiving real camera footage, and how could they tell otherwise? A physical camera can be replaced by a virtual camera, which swaps the real footage for a deepfake filter applied by software in real time. Another angle for fraudsters is to feed a pre-recorded video of the real person as if the camera had captured it live, whether to take “selfies” as the victim or to impersonate someone in confidential meetings after gaining access to a company’s meeting calendar.

The problem: even the most advanced detection tools place their trust in a vulnerable component, the device camera.

Deepfakes can now be generated in real time using off-the-shelf libraries like Deep-Live-Cam and nothing more than a LinkedIn photo. And installing a free GitHub library is already “the hard way”: user-friendly commercial tools like Viggle.AI let anyone live-stream deepfake-filtered video of themselves for a low fee of $20 per month. For a serial fraudster, this is a very acceptable cost of doing business.

"Yes, but we will wait until there's a mature solution for this."

Shockingly, this is a verbatim quote we heard from a risk leader at a major financial institution. And we understand: sophisticated deepfake fraud is already technologically possible, but it has not yet reached a scale that warrants widespread panic. These tools have only been around for a year or less, and so far we’ve seen only a small number of them, most run by private enthusiasts. Yet headlines about deepfake fraud have multiplied massively in recent years, and analysts at leading consulting firms predict that GenAI will add billions of dollars in fraud losses on top of existing forecasts over the next few years. The problem is happening right now, and once it becomes common, there will be no surefire solution, only workarounds:

- Bank managers will need to triple-confirm every wire transfer by phone.
- Telehealth professionals will second-guess whether patients are real before prescribing medication.
- Courts will start questioning whether the witness on camera is even a person.

If we don't solve this problem, everyone pays a price: not necessarily because everyone gets scammed all the time, but because time-consuming, cumbersome security workarounds become commonplace once showing your face on camera no longer means anything.

This turns trust into friction, and friction kills business and adoption of safe practices.

What Can Be Done?

We don’t need more guesswork. We need certainty. The identity industry is working hard to establish trust, as innovations such as facial recognition for entering the subway in Osaka or boarding a plane in the US show. What’s noteworthy is that these operators control the camera hardware themselves, so they can ensure the cameras are real and that the footage is not intercepted by a fraudster between capturing the traveler and verifying them.

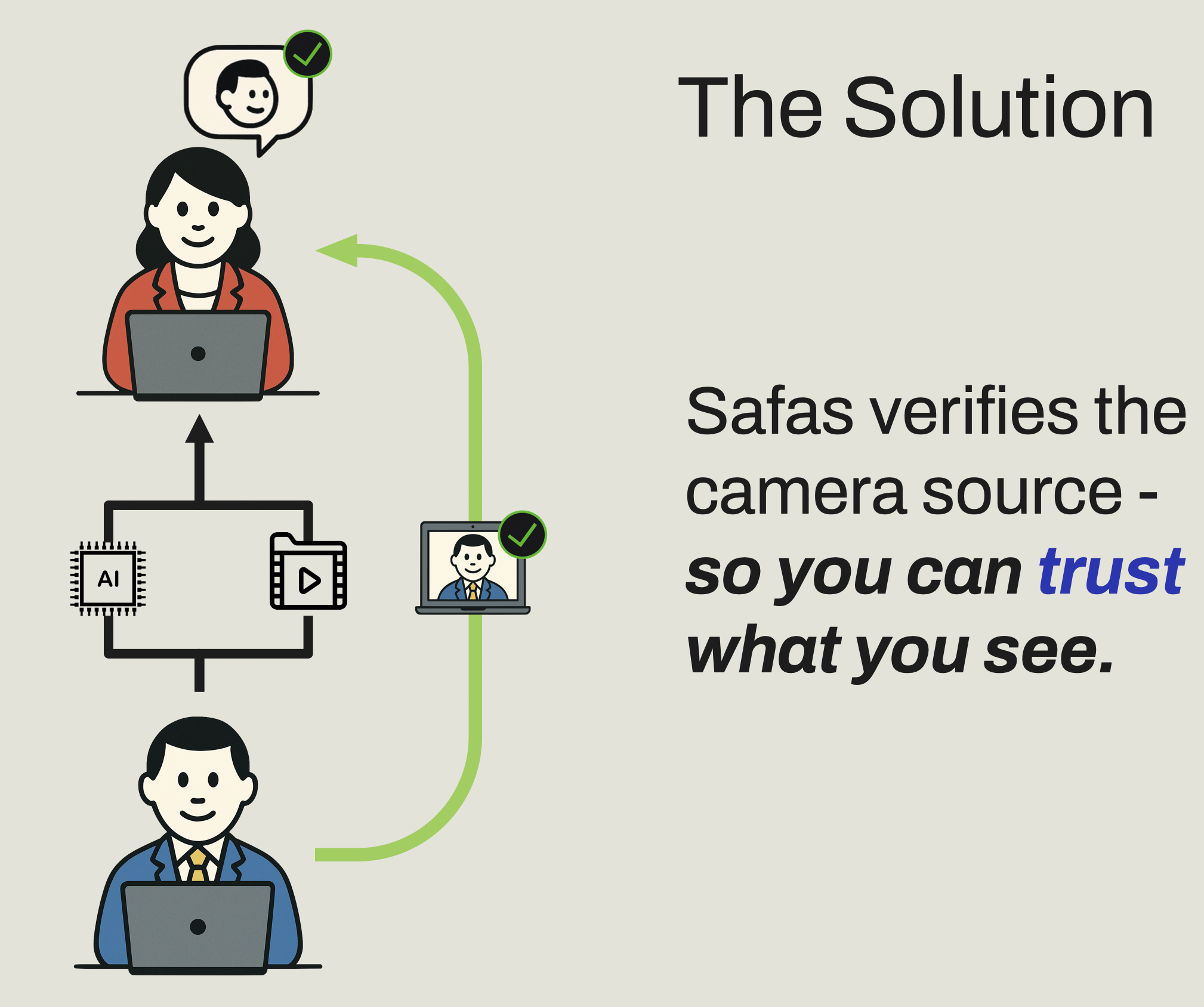

Safas’s vision is to establish a “secure line” for camera streams, ensuring that what appears on screen is exactly what the physical camera captured.

We know trust starts at the source, and in the fight against deepfakes, injected recordings, and other manipulations, the source is the camera. If we can say with absolute certainty that the camera footage is authentic, we rule out every manipulation, from the most sophisticated deepfake to a simple injection of a prerecorded video of the victim.
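To make the idea of a “secure line” a bit more concrete, here is a minimal sketch of one generic building block such a system could use: tagging each frame at the source with a keyed message authentication code (HMAC-SHA256) bound to a frame counter, so that a swapped-in deepfake frame or a replayed recording fails verification downstream. This is an illustration under stated assumptions, not Safas’s implementation: the key, `tag_frame`, and `FrameVerifier` are hypothetical, and the sketch assumes the key stays inside the physical camera, out of the fraudster’s reach.

```python
import hashlib
import hmac

# Hypothetical per-device secret. The sketch assumes it never leaves the
# physical camera; a real device would keep it in protected hardware.
CAMERA_KEY = b"hypothetical-per-device-secret"


def tag_frame(frame: bytes, counter: int, key: bytes = CAMERA_KEY) -> bytes:
    """Camera side: compute an HMAC-SHA256 tag over the counter and frame bytes."""
    # Binding the counter into the tag lets the verifier detect replays.
    message = counter.to_bytes(8, "big") + frame
    return hmac.new(key, message, hashlib.sha256).digest()


class FrameVerifier:
    """Receiver side: accept only correctly tagged, strictly increasing frames."""

    def __init__(self, key: bytes) -> None:
        self.key = key
        self.last_counter = -1

    def accept(self, frame: bytes, counter: int, tag: bytes) -> bool:
        # Reject replayed or out-of-order frames before checking the tag.
        if counter <= self.last_counter:
            return False
        expected = tag_frame(frame, counter, self.key)
        # compare_digest compares in constant time to avoid timing leaks.
        if not hmac.compare_digest(expected, tag):
            return False
        self.last_counter = counter
        return True


if __name__ == "__main__":
    verifier = FrameVerifier(CAMERA_KEY)
    frame1 = b"raw-sensor-bytes-1"
    tag1 = tag_frame(frame1, 1)
    print(verifier.accept(frame1, 1, tag1))  # True: genuine frame
    print(verifier.accept(frame1, 1, tag1))  # False: replayed frame
    tag2 = tag_frame(b"raw-sensor-bytes-2", 2)
    print(verifier.accept(b"deepfake-frame", 2, tag2))  # False: content altered
```

HMAC is used here only because it ships with Python’s standard library. Because the verifier holds the same key as the camera, it could also forge tags; a deployed design would more plausibly rely on asymmetric signatures with keys held in tamper-resistant hardware.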

We at Safas believe in a world where video feeds can be proven real in real time, continuously, and invisibly, with no friction for end users, which is the key to adoption.

Safas is currently in closed beta. Join our waitlist for a trial.